Support vector machines (SVMs) are a robust and versatile family of supervised learning methods in machine learning and artificial intelligence. Rooted in both mathematics and computer science, SVMs are used for classification and regression tasks, and they work by finding an optimal separating hyperplane, often in a high-dimensional feature space. This article gives an overview of how support vector machines work, their key components, where they are applied, and what their strengths are. Read on to the end for a basic understanding of the method.

Understanding Support Vector Machines

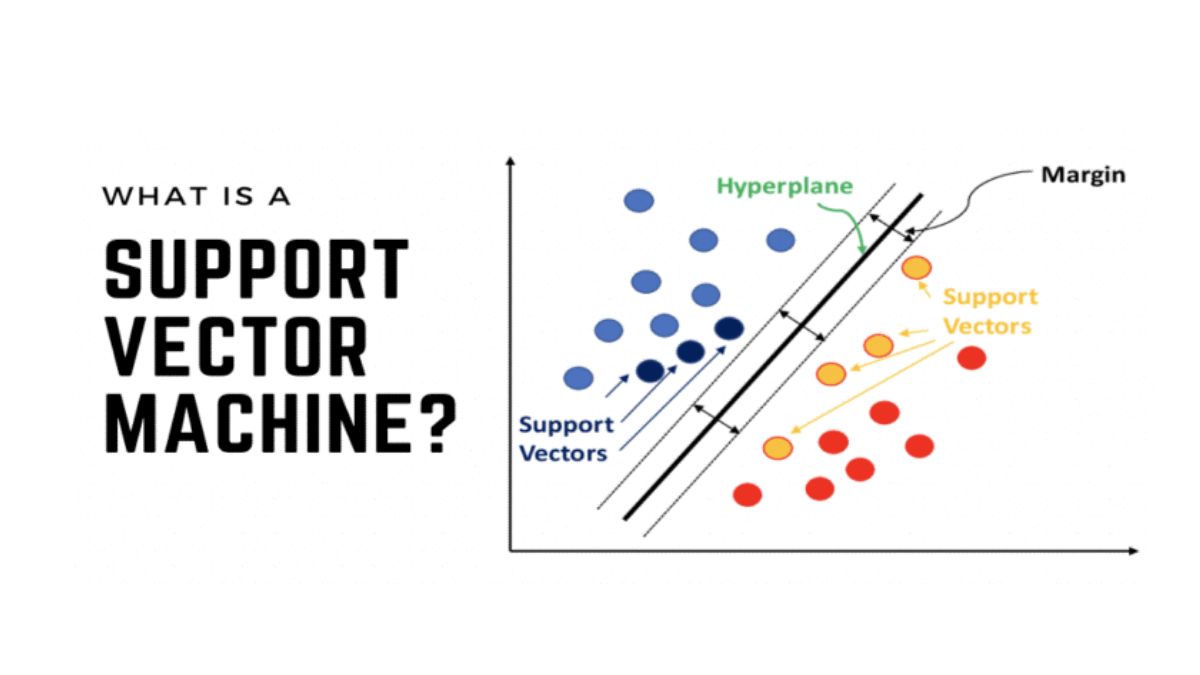

At its core, the Support Vector Machine is a supervised learning algorithm used for classification and regression tasks. In classification, its primary objective is to find the best possible boundary, known as a hyperplane, that separates data points of different classes in a feature space. The hyperplane is chosen to maximize the margin, that is, the distance between the boundary and the closest points of each class. A wider margin generally improves the model's ability to make correct predictions on unseen data.
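One common way to state this precisely is the hard-margin optimization problem below. It assumes linearly separable training data $(\mathbf{x}_i, y_i)$ with labels $y_i \in \{-1, +1\}$, and uses standard textbook notation: $\mathbf{w}$ is the weight (normal) vector of the hyperplane and $b$ is the bias.

$$
\min_{\mathbf{w},\,b}\ \frac{1}{2}\lVert \mathbf{w} \rVert^2
\quad \text{subject to} \quad
y_i\,(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1, \qquad i = 1, \dots, n
$$

The hyperplane is $\mathbf{w}^\top \mathbf{x} + b = 0$. The constraints keep every training point on its correct side at a functional distance of at least 1, and the resulting margin width is $2 / \lVert \mathbf{w} \rVert$, so minimizing $\lVert \mathbf{w} \rVert$ is the same as maximizing the margin.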

The term support vector refers to the training points that lie closest to the decision boundary (on the margin or, with a soft margin, inside it). These points alone determine the position of the hyperplane and the width of the margin: removing any other training point leaves the solution unchanged. SVM therefore seeks the hyperplane that separates the classes while keeping the largest possible distance to these nearest points. Maximizing the margin in this way reduces the risk of overfitting and tends to improve generalization to new data.
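To make "margin" and "support vector" concrete, the following plain-Python sketch measures the signed distance $y_i(\mathbf{w}^\top\mathbf{x}_i + b)/\lVert\mathbf{w}\rVert$ from each training point to a given hyperplane and reports the points that attain the smallest distance. The helper names, the toy data, and the hyperplane $\mathbf{w} = (1, 1)$, $b = -3.5$ are illustrative assumptions. The code only evaluates a hyperplane you hand it; it does not solve the SVM optimization (for these particular points, this hyperplane does happen to be the maximum-margin one).

```python
import math

def distances_to_hyperplane(w, b, points, labels):
    """Signed geometric distance y_i * (w . x_i + b) / ||w|| for each point.

    Positive values mean the point lies on the correct side of the hyperplane.
    """
    norm = math.sqrt(sum(wj * wj for wj in w))
    return [
        y * (sum(wj * xj for wj, xj in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    ]

def margin_and_support_vectors(w, b, points, labels, tol=1e-9):
    """Return the smallest signed distance and the points that attain it."""
    dists = distances_to_hyperplane(w, b, points, labels)
    margin = min(dists)
    support = [x for x, d in zip(points, dists) if abs(d - margin) <= tol]
    return margin, support

# Hypothetical toy data: three negative and three positive 2-D points.
points = [(1.0, 1.0), (2.0, 0.0), (0.0, 1.0), (3.0, 2.0), (2.0, 3.0), (4.0, 4.0)]
labels = [-1, -1, -1, 1, 1, 1]
# Hand-picked hyperplane x1 + x2 = 3.5 (not learned by a solver).
w, b = (1.0, 1.0), -3.5

margin, support = margin_and_support_vectors(w, b, points, labels)
print(round(margin, 4), support)
# prints: 1.0607 [(1.0, 1.0), (2.0, 0.0), (3.0, 2.0), (2.0, 3.0)]
```

The four reported points sit exactly on the margin boundaries; moving any of the other two points (while keeping them outside the margin) would not change the result.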

Key Components of Support Vector Machines

Kernel Trick: One of the features that distinguishes SVM from many other algorithms is its use of the kernel trick. This technique lets SVM handle data that is not linearly separable by implicitly mapping it into a higher-dimensional feature space, where the classes may become linearly separable. The mapping is never computed explicitly: a kernel function returns the inner product of two points in that space directly from their original coordinates. Popular kernel functions include linear, polynomial, radial basis function (RBF), and sigmoid, each suited to different kinds of data.
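The kernel trick rests on the fact that a kernel $K(\mathbf{x}, \mathbf{z})$ equals an inner product $\varphi(\mathbf{x}) \cdot \varphi(\mathbf{z})$ in some feature space. The sketch below defines the four kernels listed above in plain Python and checks that identity for the degree-2 polynomial kernel in two dimensions, whose explicit feature map is $\varphi(\mathbf{x}) = (x_1^2,\ \sqrt{2}\,x_1 x_2,\ x_2^2)$. The function names and the parameter names and defaults (`degree`, `coef0`, `gamma`, `alpha`) are illustrative assumptions, not settings taken from any particular library.

```python
import math

def linear_kernel(x, z):
    """Plain inner product x . z (no mapping at all)."""
    return sum(a * b for a, b in zip(x, z))

def polynomial_kernel(x, z, degree=2, coef0=0.0):
    """(x . z + coef0) ** degree."""
    return (linear_kernel(x, z) + coef0) ** degree

def rbf_kernel(x, z, gamma=0.5):
    """exp(-gamma * ||x - z||^2); equals 1.0 when x == z."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def sigmoid_kernel(x, z, alpha=0.1, coef0=0.0):
    """tanh(alpha * x . z + coef0); not positive semidefinite for every parameter choice."""
    return math.tanh(alpha * linear_kernel(x, z) + coef0)

def phi_degree2(x):
    """Explicit 3-D feature map whose inner product reproduces (x . z) ** 2 for 2-D inputs."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)

x, z = (1.0, 2.0), (3.0, 4.0)
implicit = polynomial_kernel(x, z)                        # computed in the original 2-D space
explicit = linear_kernel(phi_degree2(x), phi_degree2(z))  # inner product in the 3-D feature space
print(implicit, round(explicit, 10))  # prints: 121.0 121.0
```

Both routes give the same number, but the kernel never builds the 3-D vectors. For the RBF kernel the corresponding feature space is infinite-dimensional, so computing the kernel directly is the only practical option.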

Regularization: SVM uses a regularization parameter to balance two competing goals: a wide margin and a low classification error on the training data. This parameter, usually denoted ‘C’, controls the trade-off. A small ‘C’ value leads to a wider margin but tolerates some misclassifications, while a large ‘C’ value penalizes training errors heavily, even if the margin becomes narrower.
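In the usual textbook notation (weight vector $\mathbf{w}$, bias $b$, labels $y_i \in \{-1, +1\}$, and one slack variable $\xi_i$ per training point), the soft-margin problem in which $C$ appears is commonly written as:

$$
\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}}\ \frac{1}{2}\lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{n} \xi_i
\quad \text{subject to} \quad
y_i\,(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1 - \xi_i, \quad \xi_i \ge 0, \qquad i = 1, \dots, n
$$

Each $\xi_i$ measures how far point $i$ violates the margin, and $\xi_i > 1$ means the point is misclassified. A small $C$ makes violations cheap, so the optimizer favors a small $\lVert \mathbf{w} \rVert$ and hence a wide margin of $2 / \lVert \mathbf{w} \rVert$; a large $C$ makes violations expensive and pushes the solution toward fitting the training points.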

Soft Margin: When the data is not perfectly separable, SVM uses a soft margin. The algorithm tolerates some points falling inside the margin or on the wrong side of the boundary in exchange for a model that generalizes better, and the parameter ‘C’ sets how heavily such violations are penalized. Soft-margin SVMs are especially useful when dealing with noisy or overlapping data.
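The trade-off between margin width and violations can be seen numerically. This plain-Python sketch computes the slack $\xi_i = \max(0,\ 1 - y_i(\mathbf{w}^\top\mathbf{x}_i + b))$ for each point and the soft-margin objective $\frac{1}{2}\lVert\mathbf{w}\rVert^2 + C\sum_i \xi_i$ for two hand-picked hyperplanes: a wide-margin one that gives up on a noisy point and a narrow-margin one that fits it. The helper names, toy data, and candidate hyperplanes are illustrative assumptions; the code scores the candidates but does not train a model.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def slacks(w, b, X, y):
    """xi_i = max(0, 1 - y_i * (w . x_i + b)): 0 outside the margin, > 1 if misclassified."""
    return [max(0.0, 1.0 - yi * (dot(w, xi) + b)) for xi, yi in zip(X, y)]

def soft_margin_objective(w, b, X, y, C):
    """0.5 * ||w||^2 + C * (sum of slacks), the soft-margin primal objective."""
    return 0.5 * dot(w, w) + C * sum(slacks(w, b, X, y))

# Hypothetical toy data: the positive point (2, 0) is deliberately "noisy" and sits near the negatives.
X = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0), (6.0, 0.0), (2.0, 0.0)]
y = [-1, -1, 1, 1, 1]

# Two hand-picked candidate hyperplanes (w, b), not produced by a solver:
wide = ((0.5, 0.0), -1.5)    # boundary x1 = 3, margin width 2/||w|| = 4, misclassifies (2, 0)
narrow = ((2.0, 0.0), -3.0)  # boundary x1 = 1.5, margin width 2/||w|| = 1, no violations

for C in (0.5, 10.0):
    scores = {name: soft_margin_objective(w, b, X, y, C)
              for name, (w, b) in (("wide", wide), ("narrow", narrow))}
    print(C, scores, "preferred:", min(scores, key=scores.get))
```

With C = 0.5 the wide-margin hyperplane scores lower (0.875 versus 2.0), so the noisy point is sacrificed. With C = 10 the narrow hyperplane wins (2.0 versus 15.125). For this data the preference flips at C = 1.25, where 0.125 + 1.5C = 2.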

Applications of Support Vector Machines

Support Vector Machines find applications across a wide array of domains, owing to their versatility and efficiency:

- Image Classification: SVMs have been widely employed in image classification tasks, such as object recognition in computer vision. By converting images into feature vectors, SVMs can distinguish between different objects or entities within images.

- Text Classification: In natural language processing, SVMs are used for tasks like sentiment analysis, spam detection, and text categorization. They can effectively separate and categorize text documents based on their content.

- Bioinformatics: SVMs have been used extensively in bioinformatics for tasks such as protein structure prediction, gene classification, and disease identification through genetic data analysis.

- Finance: SVMs are used in financial markets for tasks like stock price prediction, credit risk assessment, and fraudulent transaction detection.

Advantages of Support Vector Machines

- Effective in High-Dimensional Spaces: SVMs remain effective when the data has many features, including cases where the number of features exceeds the number of samples, as is common in text classification.

- Robust to Overfitting: Maximizing the margin and tuning the regularization parameter ‘C’ make SVMs comparatively resistant to overfitting, which supports strong generalization, although a poorly chosen ‘C’ or kernel can still overfit.

- Flexibility through Kernels: Choosing among kernel functions lets SVMs model complex, nonlinear decision boundaries without changing the underlying algorithm.

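- Clear Decision Boundaries: SVMs produce a well-defined decision boundary determined by the support vectors. With a linear kernel, the learned weights can be inspected directly, which is valuable where explainability matters; with nonlinear kernels the boundary is harder to interpret.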

Final words

The Support Vector Machine stands as a versatile and potent tool in the machine learning arsenal. Its ability to find maximum-margin hyperplanes, adapt to nonlinear data through kernels, and generalize well makes it a go-to choice for classification and regression tasks across diverse domains. As the field evolves, its foundational principles, margin maximization and kernel methods, are likely to remain relevant to machine learning and artificial intelligence.